Pretrial nudges

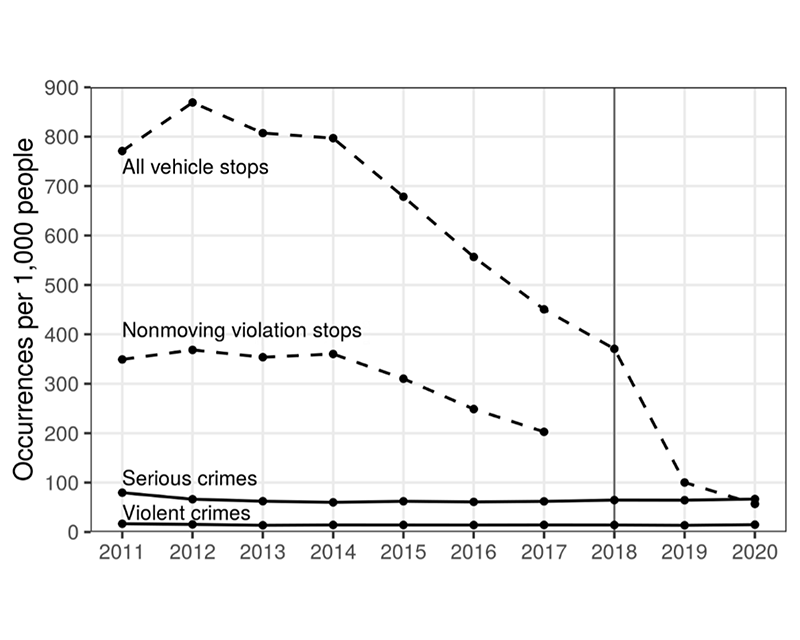

Failing to appear (FTA) in court can land a person in jail and, in turn, cost them their job or housing. Yet many people miss court simply because they forget their court date.

In a randomized controlled trial at the Santa Clara Public Defender’s Office, we found that text message reminders reduced FTA-related jail involvement by over 20%. Our findings extend previous studies that found text message reminders can help people show up to court. Our study was published in *Science Advances* in 2025.

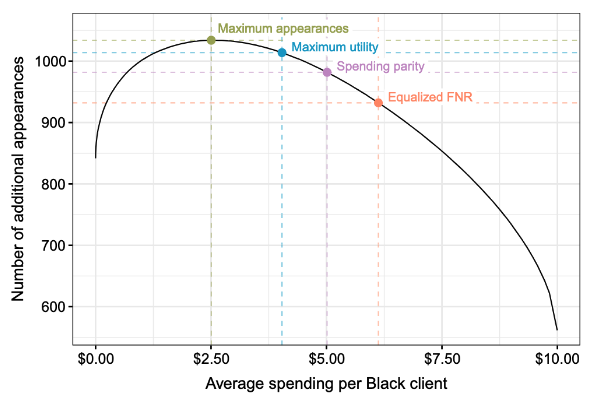

We’re now testing whether the standard consequences-focused reminder helps or hurts certain clients, and whether monetary assistance can help clients overcome financial barriers to attending court.